Achieving idempotency in the AWS serverless space

Recently I ran into something you usually only hear about when going through resources on cloud and distributed systems: everything fails, and you need to plan for it. This doesn’t just mean infrastructure failure but also software failure. If you have ever gone through the documentation of AWS messaging services such as SNS and SQS, I am pretty sure you’ve read that they ‘promise’ at-least-once message delivery and don’t guarantee exactly-once delivery. This means that your job as a developer is to provide that guarantee yourself, because even AWS resources can fail.

It all came back to “everything fails and you need to plan for it” when I recently encountered an issue where idempotency was the key to solving it. Let me first define idempotency as I understand it: an operation is idempotent when invoking it multiple times with the same input produces the same result as invoking it once. In my case that property was violated: a message holding the same values resulted in multiple documents.
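
To make the idea concrete, here is a minimal sketch that contrasts a non-idempotent write with an idempotent one. An idempotent operation leaves the system in the same state no matter how often it runs with the same input. The in-memory "tables" and the function names `storeNaive` and `storeIdempotent` are hypothetical and only stand in for a real database.

```typescript
// Shape of the message used throughout this article.
type Message = { x: string; y: string };

// Non-idempotent: every invocation appends another document,
// so a message delivered twice is stored twice.
function storeNaive(table: Message[], msg: Message): void {
  table.push(msg);
}

// Idempotent: the document is keyed by the message content,
// so repeating the call with the same input changes nothing.
function storeIdempotent(table: Map<string, Message>, msg: Message): void {
  const key = `${msg.x}#${msg.y}`;
  if (!table.has(key)) {
    table.set(key, msg);
  }
}

const msg: Message = { x: "x_unique_value", y: "y_unique_value" };

// Simulate the same message being delivered twice.
const naiveTable: Message[] = [];
storeNaive(naiveTable, msg);
storeNaive(naiveTable, msg);

const idempotentTable = new Map<string, Message>();
storeIdempotent(idempotentTable, msg);
storeIdempotent(idempotentTable, msg);

console.log(naiveTable.length);    // 2
console.log(idempotentTable.size); // 1
```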

How I experienced it

The setup I was working with was the following…

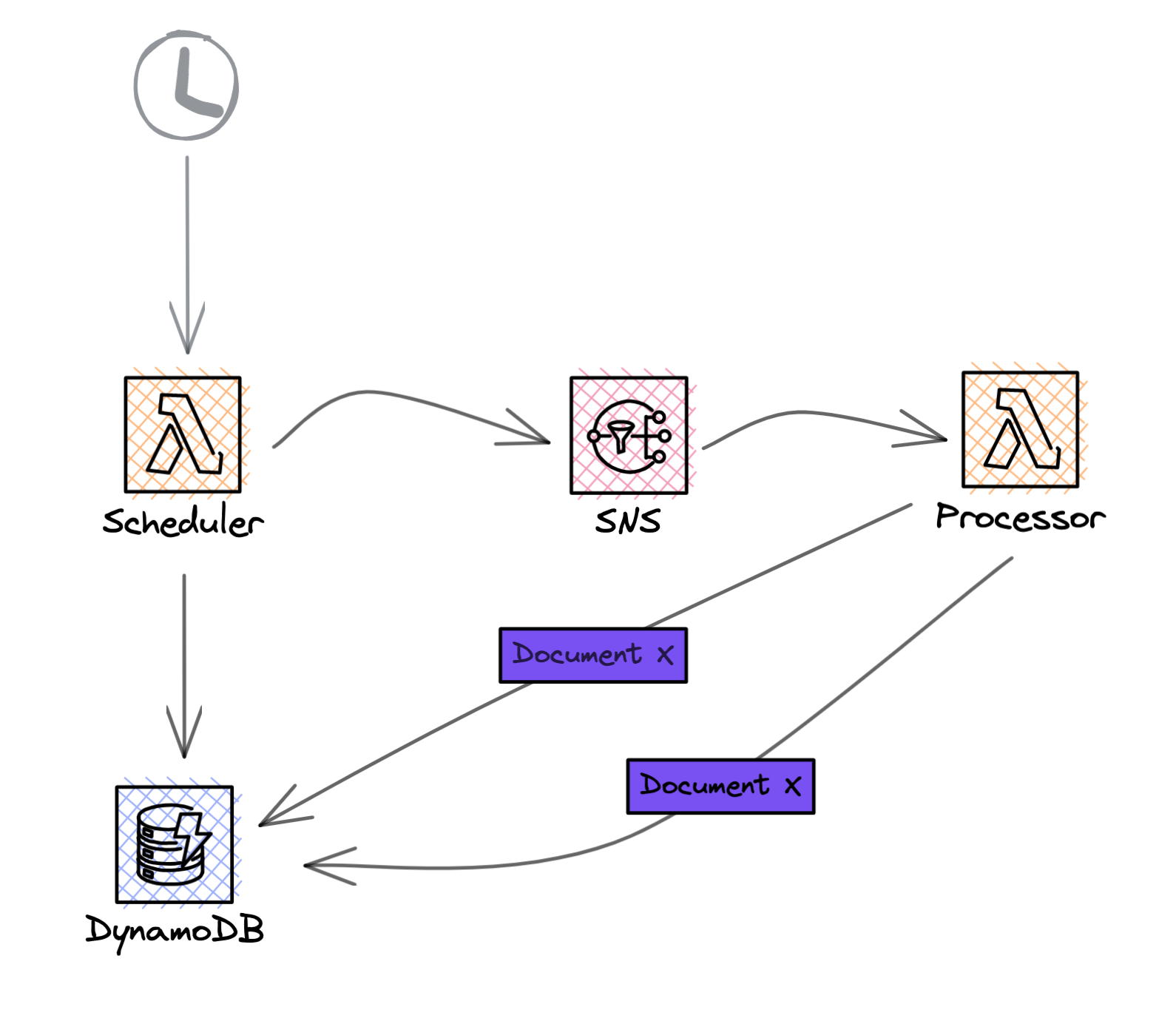

A Lambda function that is scheduled to run every day queries a bunch of data from DynamoDB and publishes a message to an SNS topic. Another Lambda function is subscribed to that topic and processes each message. At the end of processing a message, exactly one document should be stored in the table, based on the message data it received.

The implementation failed me because I hadn’t kept a couple of things in mind that revolve around “things fail all the time”. First, a message can be received more than once in rare situations. Second, I had modeled the document without ensuring uniqueness, so the business process was not idempotent. The unique document that should have been stored in the database once was stored twice.

Figure 1: One event record results in two documents “of the same kind” stored in the database

A step towards idempotency

Now the cool thing is that with some tweaks I was able to eliminate this issue, I hope. At the time I had no clue what caused it: whether the scheduler was sending two different messages with the same record because of data pollution, whether the processor was receiving the message more than once because SNS was having issues, or whether the processor had some edge case that caused it to process the same message twice. When in doubt, apply all types of duct tape until it’s fixed. I took several approaches.

FIFO Topics & Queues

Let’s start with the messaging service. The cool thing is that SNS has a capability that helps decrease the chances of receiving the same message more than once: SNS FIFO topics. FIFO topics support message deduplication. You either configure the topic to deduplicate based on the content of the published message, or you provide a deduplication ID with each message. In my case, the message is always unique: it consists of two properties holding the UUIDs of two different types of documents, and the combination of the two is unique. So I went for deduplication based on the content of the message.

Distributed systems (like SNS) and client applications sometimes generate duplicate messages. You can avoid duplicated message deliveries from the topic in two ways: either by enabling content-based deduplication on the topic, or by adding a deduplication ID to the messages that you publish. With message content-based deduplication, SNS uses a SHA-256 hash to generate the message deduplication ID using the body of the message. After a message with a specific deduplication ID is published successfully, there is a 5-minute interval during which any message with the same deduplication ID is accepted but not delivered. If you subscribe a FIFO queue to a FIFO topic, the deduplication ID is passed to the queue and it is used by SQS to avoid duplicate messages being received.

— Introducing Amazon SNS FIFO — First-In-First-Out Pub/Sub Messaging
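
To illustrate what content-based deduplication implies for the publisher, here is a rough sketch that derives an ID from the message body with SHA-256, as the quote describes. The function name `contentBasedDeduplicationId` is hypothetical and the hex encoding is my assumption; the point is only that two bodies must be identical as strings to produce the same ID.

```typescript
import { createHash } from "crypto";

// Illustrative only: hashes the raw message body with SHA-256.
// The hex encoding is an assumption, not confirmed SNS behaviour.
function contentBasedDeduplicationId(messageBody: string): string {
  return createHash("sha256").update(messageBody, "utf8").digest("hex");
}

// Same properties, same order: identical body, identical ID.
const first = contentBasedDeduplicationId(
  JSON.stringify({ x: "x_unique_value", y: "y_unique_value" })
);
const second = contentBasedDeduplicationId(
  JSON.stringify({ x: "x_unique_value", y: "y_unique_value" })
);

// Same properties, different order: the serialized body differs, so does the ID.
const reordered = contentBasedDeduplicationId(
  JSON.stringify({ y: "y_unique_value", x: "x_unique_value" })
);

console.log(first === second);    // true
console.log(first === reordered); // false
```

If the publisher cannot guarantee a stable serialization, passing an explicit deduplication ID built from the unique values is the safer choice.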

To achieve exactly-once message delivery, the following conditions must be met:

An SQS FIFO queue is subscribed to the SNS FIFO topic

The SQS queue processes the messages and deletes them before the visibility timeout expires

There is no message filtering on the SNS subscription

There must be no network disruptions that could prevent acknowledgment of the received message

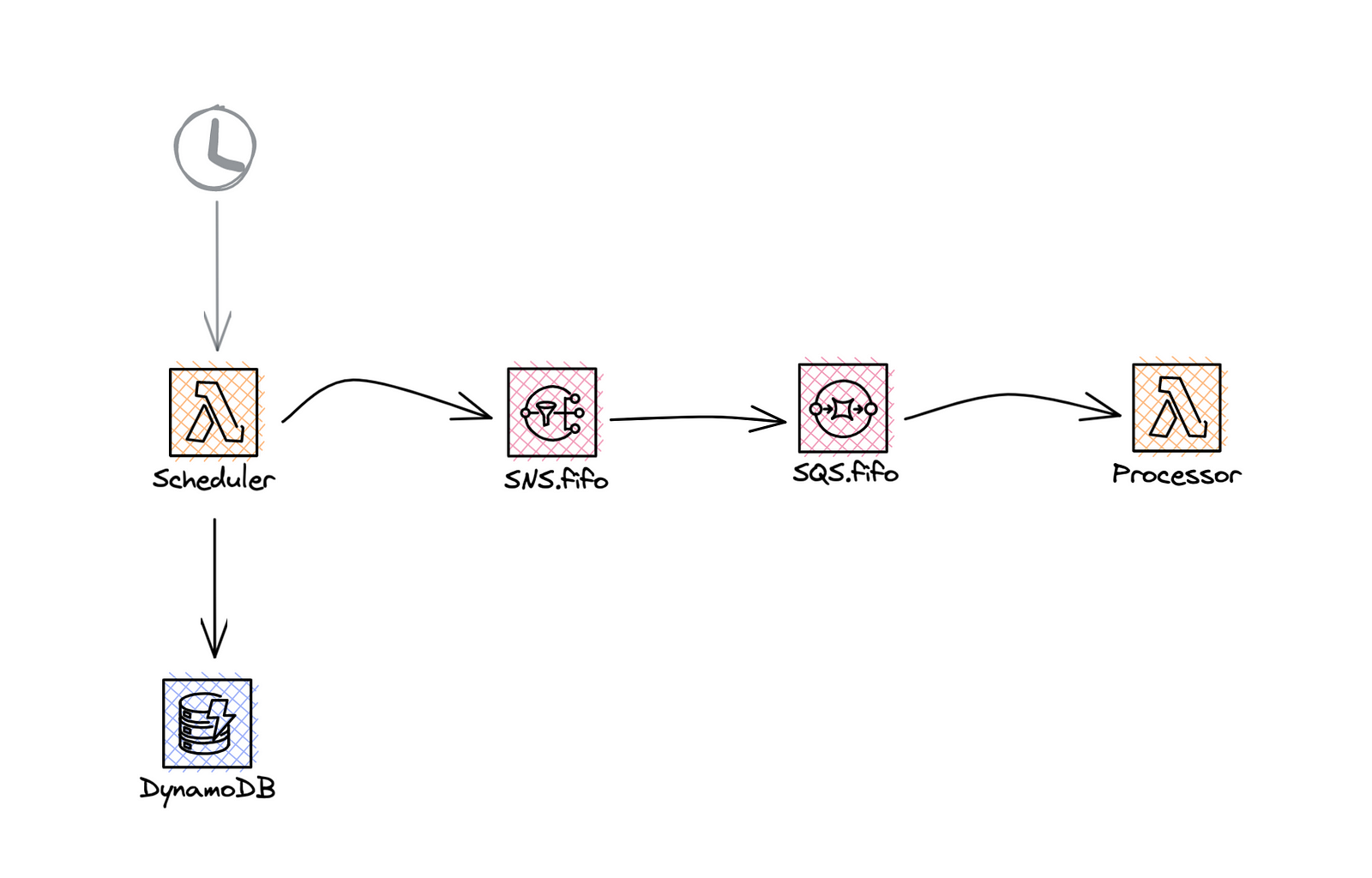

All of these conditions can be met through configuration, except for the last one, “There must be no network disruptions”, which we will take care of in the next section. With some adjustments, our architecture should look like Figure 2.

Figure 2: Adding both a FIFO SNS topic & SQS in front of the lambda to handle message deduplication

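In the code snippet below, we pass a value to the MessageDeduplicationId property that is composed of two values whose combination is always unique. An explicitly provided deduplication ID takes precedence over the one generated by content-based deduplication. FIFO topics also require a MessageGroupId on every published message.

```typescript
import SNS from 'aws-sdk/clients/sns';

const sns = new SNS({ region: process.env.AWS_REGION });

const xValue = "x_unique_value";
const yValue = "y_unique_value";

// Top-level await: assumes this runs inside an ES module or an async function
await sns.publish({
  TopicArn: process.env.MY_FIFO_TOPIC_ARN,
  Message: JSON.stringify({ x: xValue, y: yValue }),
  // Required for FIFO topics; the group name here is a placeholder
  MessageGroupId: 'my-message-group',
  // Messages with the same ID are deduplicated within the 5-minute window
  MessageDeduplicationId: `${xValue}#${yValue}`,
}).promise();
```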

Using the serverless framework, the IaC should look something like the following

```yaml
# These resources go under resources.Resources in serverless.yml
MyFifoSNSTopic:
  Type: AWS::SNS::Topic
  Properties:
    FifoTopic: true
    ContentBasedDeduplication: true
    TopicName: MyFifoSNSTopic.fifo

MyFifoSQSQueue:
  Type: AWS::SQS::Queue
  Properties:
    FifoQueue: true
    ContentBasedDeduplication: true
    QueueName: MyFifoSQSQueue.fifo

MySNSTopicSubscription:
  Type: AWS::SNS::Subscription
  Properties:
    RawMessageDelivery: true
    TopicArn:
      Ref: MyFifoSNSTopic
    Protocol: sqs
    Endpoint:
      Fn::GetAtt:
        - MyFifoSQSQueue
        - Arn

# Allows the FIFO topic to send messages to the FIFO queue
MySQSSNSPolicy:
  Type: AWS::SQS::QueuePolicy
  Properties:
    Queues:
      - Ref: MyFifoSQSQueue
    PolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Action: SQS:SendMessage
          Effect: Allow
          Principal:
            Service: "sns.amazonaws.com"
          Resource:
            - Fn::GetAtt:
                - MyFifoSQSQueue
                - Arn
          Condition:
            ArnEquals:
              aws:SourceArn:
                Ref: MyFifoSNSTopic
```

There is a caveat, though: deduplication only happens within a 5-minute interval. Simply put, if the same message (same deduplication ID or message content) is sent again more than 5 minutes after the previous one, SNS and SQS won’t deduplicate it for us and will treat it as a new message that was never sent before. This can also be tackled with the approach in the next section.
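
The effect of the window can be sketched with a small in-memory simulation. This is not how SNS or SQS are implemented; the class `DeduplicationWindow` and its method are hypothetical, and the behaviour at exactly 5 minutes is an assumption. It only shows that a duplicate is dropped inside the window and delivered again once the window has passed.

```typescript
const DEDUPLICATION_WINDOW_MS = 5 * 60 * 1000;

// Toy model of a deduplication window keyed by deduplication ID.
class DeduplicationWindow {
  // Timestamp (ms) of the last delivered message per deduplication ID.
  private readonly lastDelivered = new Map<string, number>();

  // Returns true if the message should be delivered, false if it is
  // treated as a duplicate of a message delivered within the window.
  shouldDeliver(deduplicationId: string, nowMs: number): boolean {
    const previous = this.lastDelivered.get(deduplicationId);
    if (previous !== undefined && nowMs - previous < DEDUPLICATION_WINDOW_MS) {
      return false;
    }
    this.lastDelivered.set(deduplicationId, nowMs);
    return true;
  }
}

const dedupWindow = new DeduplicationWindow();
const id = "x_unique_value#y_unique_value";
const minute = 60 * 1000;

console.log(dedupWindow.shouldDeliver(id, 0));          // true: first delivery
console.log(dedupWindow.shouldDeliver(id, 1 * minute)); // false: inside the window
console.log(dedupWindow.shouldDeliver(id, 6 * minute)); // true: the window has passed
```

The last call is exactly the case the messaging layer cannot protect against, which is why the storage layer needs its own safeguard.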

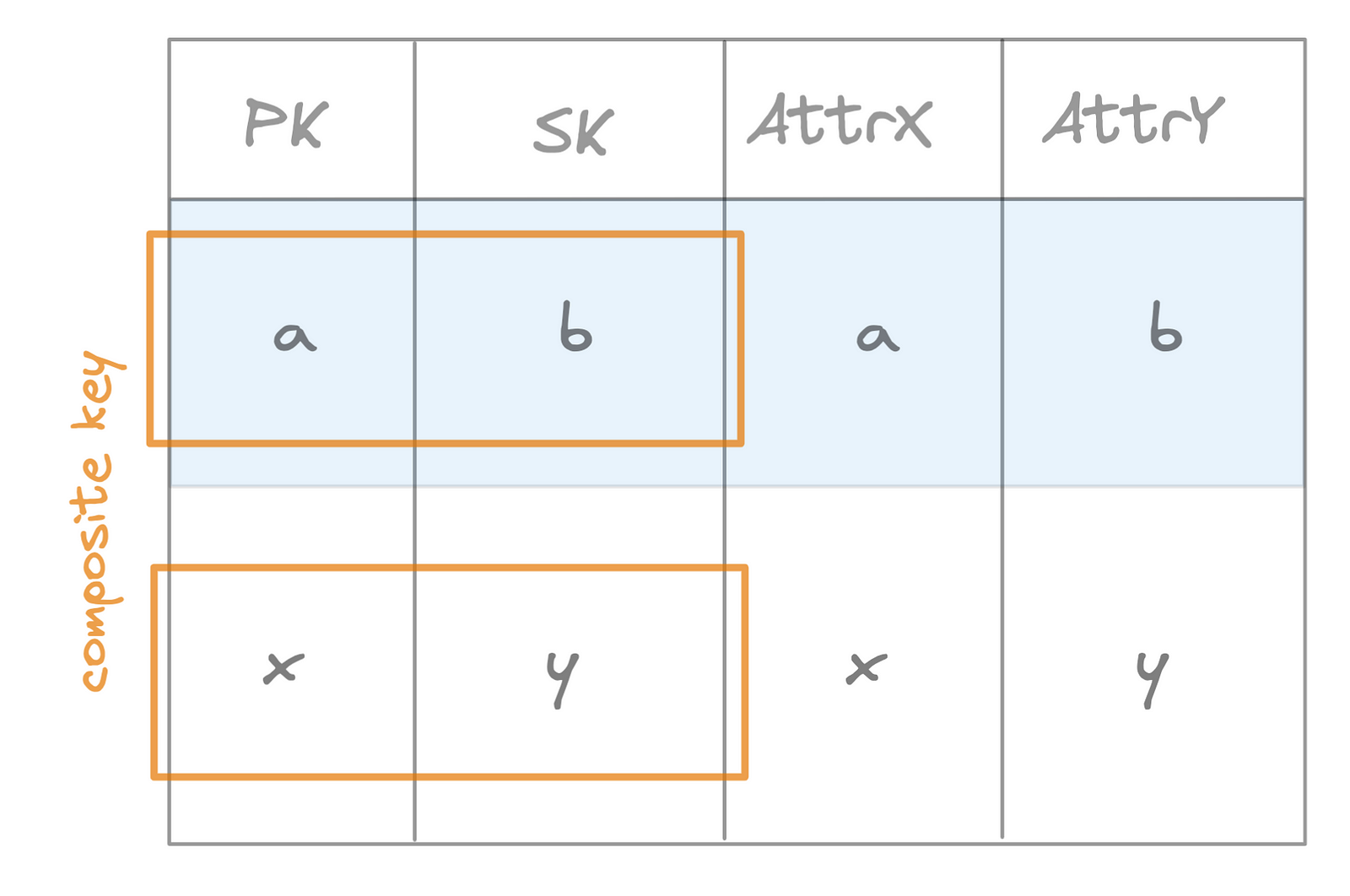

DynamoDB Composite Keys

Let’s take things one step further to make sure that we only ever store one single document, by taking advantage of composite keys in DynamoDB. The combination of the partition key (PK) and sort key (SK) attribute values forms the primary key of an item, which means no two documents with the same combined PK and SK values can exist in the table.

Figure 3: Composite keys on a DynamoDB table having unique values on the partition and sort keys
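
As a rough mental model, a table with a composite key behaves like a map keyed by the pair of partition key and sort key. The sketch below is a toy in-memory model, not the DynamoDB client; `InMemoryTable`, `put`, and `putIfAbsent` are hypothetical names. It distinguishes a plain put, which replaces an existing item with the same key, from a conditional put, which refuses to write when the key is already taken.

```typescript
type Item = { id: string; sk: string; [attribute: string]: unknown };

// Toy model of a table whose primary key is the pair (id, sk).
class InMemoryTable {
  private readonly items = new Map<string, Item>();

  // Serializing the pair as a JSON array avoids delimiter collisions.
  private keyOf(item: Item): string {
    return JSON.stringify([item.id, item.sk]);
  }

  // Plain put: an existing item with the same composite key is replaced.
  put(item: Item): void {
    this.items.set(this.keyOf(item), item);
  }

  // Conditional put: writes only if the composite key is not taken yet.
  // Returns false where a real conditional write would fail its condition.
  putIfAbsent(item: Item): boolean {
    const key = this.keyOf(item);
    if (this.items.has(key)) {
      return false;
    }
    this.items.set(key, item);
    return true;
  }

  get size(): number {
    return this.items.size;
  }
}

const table = new InMemoryTable();

// The same message processed twice yields a single document.
console.log(table.putIfAbsent({ id: "x_unique_value", sk: "y_unique_value" })); // true
console.log(table.putIfAbsent({ id: "x_unique_value", sk: "y_unique_value" })); // false
console.log(table.size); // 1

// Same partition key with a different sort key is a different document.
console.log(table.putIfAbsent({ id: "x_unique_value", sk: "another_value" })); // true
console.log(table.size); // 2
```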

The nice thing about composite keys is that if anything happens during the publishing and consuming of the message and we receive the message more than once, the composite key combined with a conditional write guarantees idempotency as a last resort when storing the document in the table. In the code snippet below, we pass a ConditionExpression. This is required if the first write should win: without it, DynamoDB does not reject a put for a composite key that already exists, it silently replaces the existing item. With the condition in place, the put fails with a ConditionalCheckFailedException, and by inspecting the reason for the failure I can decide what the next steps in the execution flow should be. In this case, I just want to exit safely to signal that the message has been processed successfully, so that it is deleted from the SQS queue.

```typescript
import type { SQSEvent } from 'aws-lambda';
import { DocumentClient } from 'aws-sdk/clients/dynamodb';

const ddbClient = new DocumentClient({ region: process.env.AWS_REGION });

export default async (event: SQSEvent): Promise<void> => {
  for (const record of event.Records) {
    try {
      // RawMessageDelivery is enabled, so the body is the published JSON itself
      const { x, y }: { x: string; y: string } = JSON.parse(record.body);

      await ddbClient.put({
        TableName: 'my-table',
        Item: {
          id: x,
          sk: y,
        },
        // Only write if no item with this composite key exists yet
        ConditionExpression: 'attribute_not_exists(#id) AND attribute_not_exists(#sk)',
        ExpressionAttributeNames: {
          '#id': 'id',
          '#sk': 'sk',
        },
      }).promise();
    } catch (error) {
      if ((error as { code?: string }).code === 'ConditionalCheckFailedException') {
        // Don't retry the message if a document with the composite key already
        // exists in the table. Treat the message as successfully processed so
        // that it is deleted from the SQS queue, and move on to the next record.
        continue;
      }
      throw error;
    }
  }
};
```

Conclusion

Idempotency can be ensured in multiple ways. One of them is what we discussed in this article: using FIFO topics and queues to deduplicate the same message within a certain time interval. To guarantee idempotency at the data layer, DynamoDB composite keys combined with a conditional write prevent storing a document whose composite key already exists.

⚠️ This is based on my own learnings and understanding. I would appreciate any feedback on and improvements to the solution described in this article.